Aggie Robotics

The Game Begins

I have been interested in robotics for a while. I considered joining the robotics club in high school, but I didn't have enough time to commit to the team. Once I started attending Texas A&M, I was thrilled to find out that the Aggie Robotics team is very active and regularly advances to the VEX U World Championships!

Each year, the VEX organization creates a game to be played competitively with robots. For the 2022-2023 season, the game was called Spin Up. It was an ultimate-frisbee-like challenge to be played both with a driver and autonomously.

I was interested in programming Team WHOOP's robots. The team wanted to incorporate computer vision algorithms to assist in aiming the robot's disk launcher towards the goal. I did not have any experience with analyzing video or interacting with low-level hardware, but I am always looking to step out of my comfort zone to expand my abilities.

Tinkering With Hardware

Since I had always worked exclusively with software, I was unsure where to begin. Each VEX robot contains a proprietary microcontroller called a "VEX Brain". It has a 12V power source, 21 proprietary "Smart Ports", eight 3-wire sensor ports, and a single Micro-USB port typically used to upload programs or pair controllers. While a camera could be connected directly to one of these ports, there are a few issues with running vision code directly on the Brain:

- The VEX Brain needs most ports to connect to motors, odometry sensors, and the radio

- Robot processing needs to react quickly to user input and sensors, so excessive load from vision processing should be avoided

- Every port except Micro-USB is connected to the main power source

- The Micro-USB port is notoriously brittle and damaging it would be detrimental to the robot

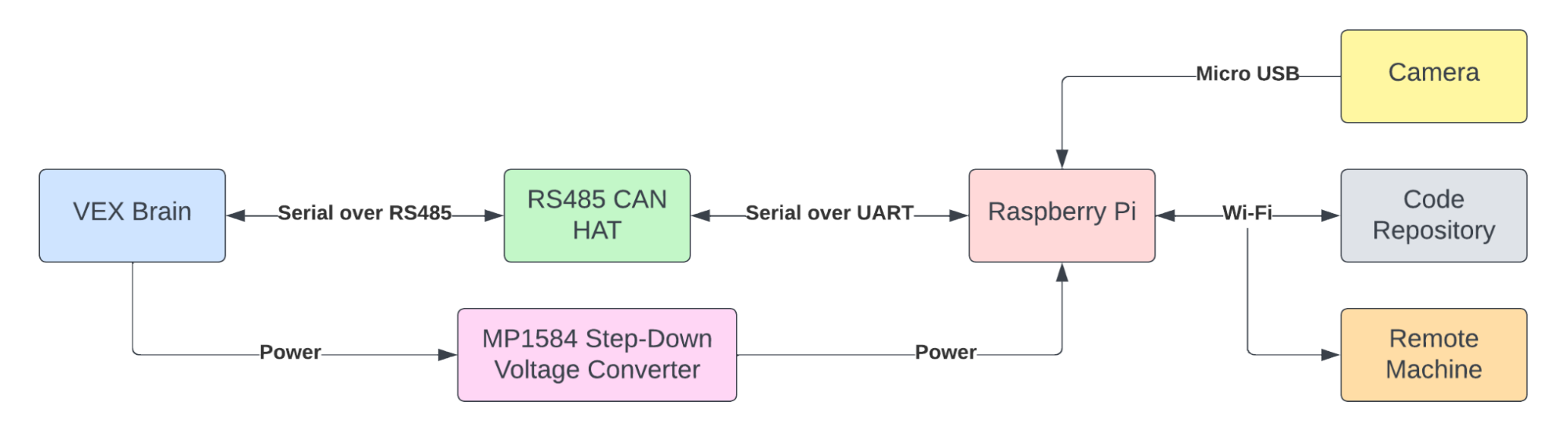

For these reasons, I decided that it would be best to attach an external computer to the robot. This would also make it easier to iterate on my vision code separately from the robot. I obtained a Raspberry Pi 3 Model A+ for this purpose. The next step was to figure out how it would be powered and how it would communicate with the Brain. An external battery could be used, but this would add weight to the robot and complicate our pre-flight checks. I decided to connect the Pi to the Brain through one of the Smart Ports since they are the most readily available, they can be operated as serial ports, and they have adequate bandwidth. The main problem is that the 12V supplied by this port is too much for the Raspberry Pi. To step down the voltage, I wired an MP1584 converter to the end of a Smart Cord. The data lines were connected to an RS485 CAN HAT, allowing the Pi's UART port to communicate with the Brain over RS-485. Unfortunately, the Raspberry Pi's UART port can be used for either raw serial communication or terminal access, but not both at once. This is inconvenient, but the Pi can also be accessed wirelessly.

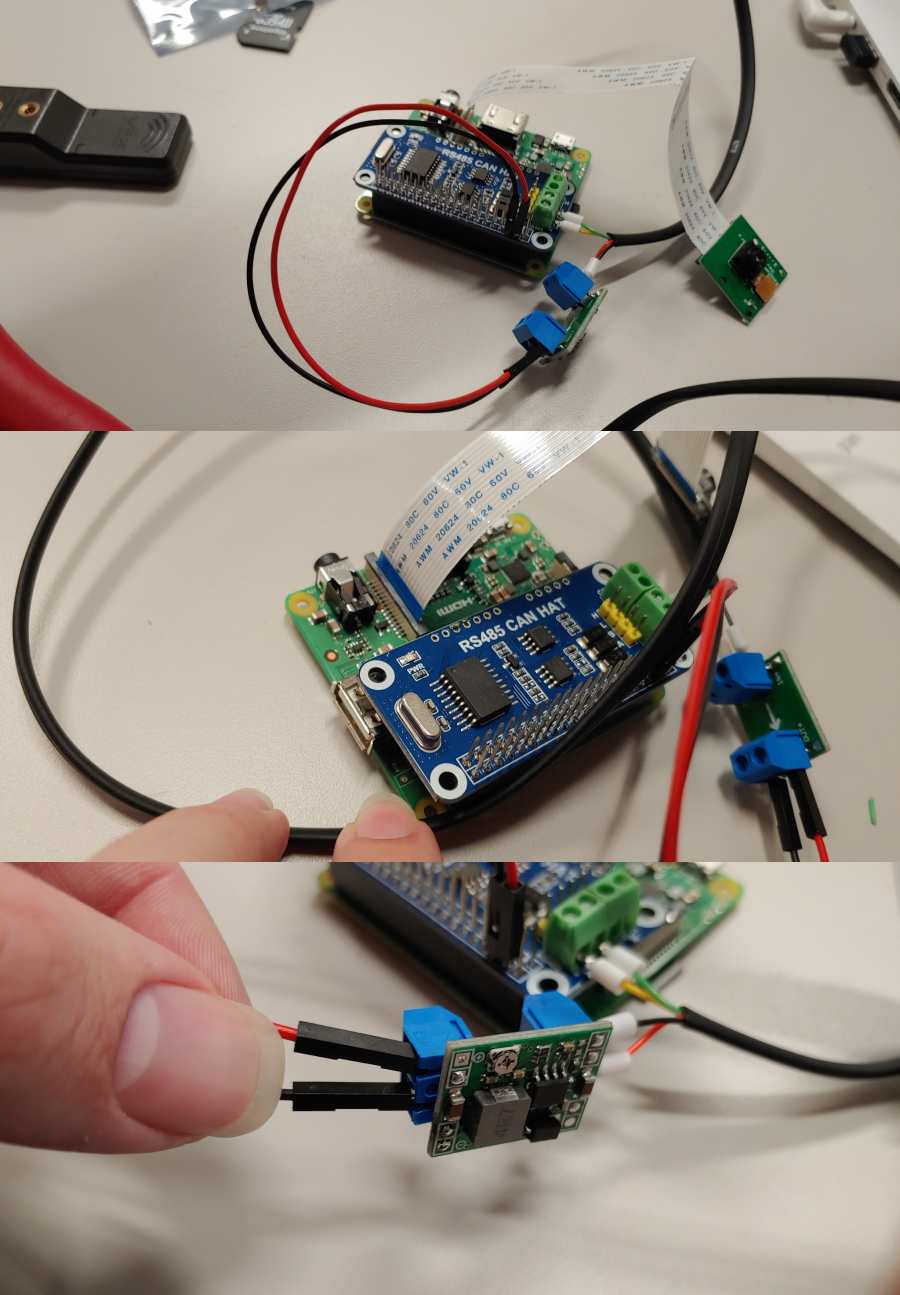

With the system designed, it was time to build it! Assembly required some soldering to connect everything. The voltage converter also had to be calibrated to provide the proper 5V to the Raspberry Pi's power pins.

Top: Complete Assembly Including Camera

Center: RS485 CAN HAT

Bottom: MP1584 Step-Down Voltage Converter

Giving A Robot Sight

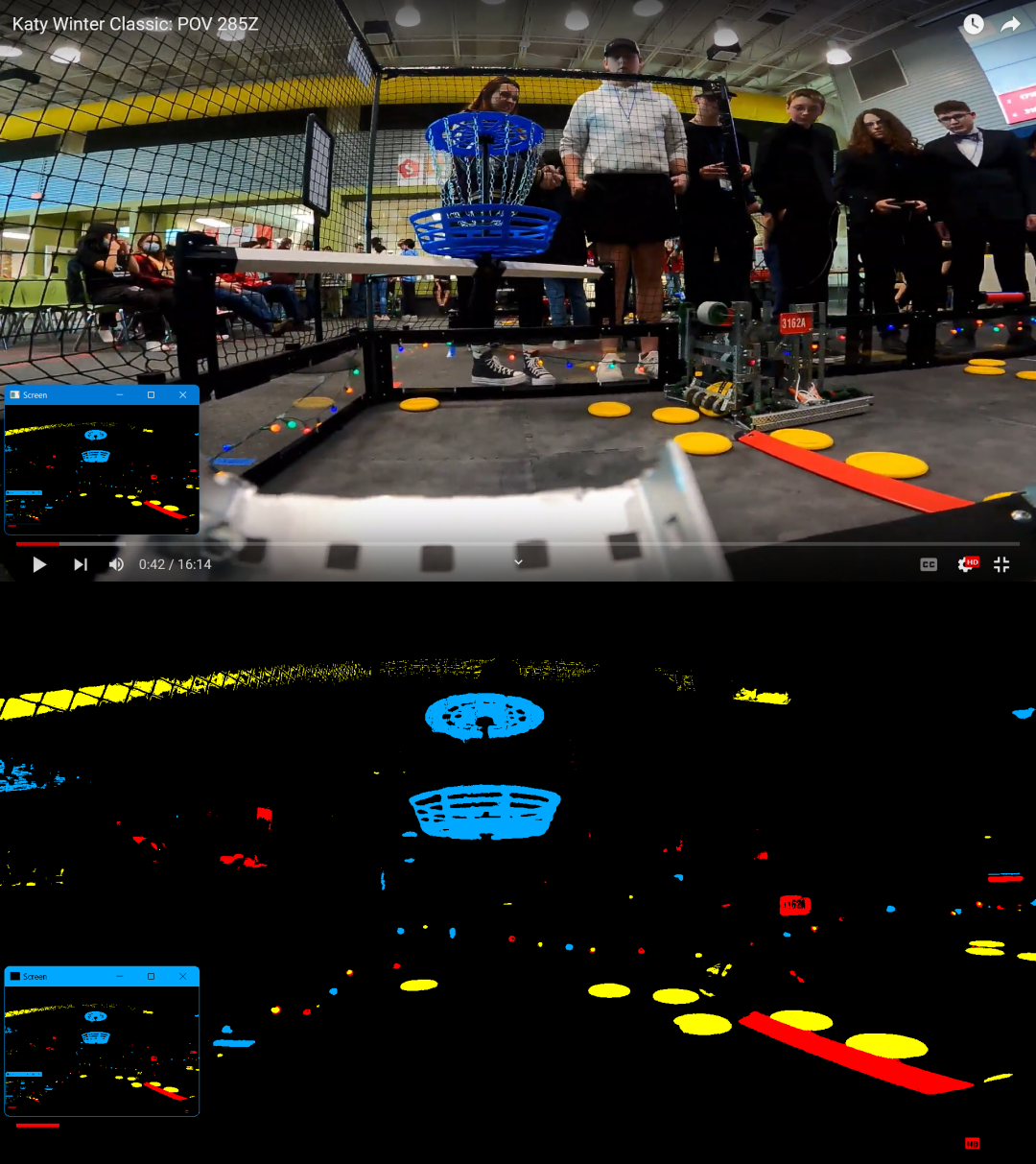

After building the Raspberry Pi assembly, it was time to start testing some vision code! This code needs to be fast enough to run in real time. VEX robots move quickly, and the robot needs to react to its sensors within milliseconds, not seconds. Processing hundreds of thousands of pixels in real time is no simple task, especially in a resource-constrained environment. I needed to significantly reduce the amount of data being processed. The first method I used was simple component-wise thresholding on each pixel, since all the important field elements are brightly colored. There is a red goal, a blue goal, and yellow disks, so I created a separate binary mask for each of these colors. I initially had limited success with this method. When I tested my program with several match videos under different lighting conditions, I was unable to find RGB ranges that would reliably separate the field elements. I needed to reevaluate my approach.
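The component-wise thresholding described above can be sketched in a few lines. This is only an illustration in plain Python, not the code that ran on the robot: the `COLOR_RANGES` values are hypothetical placeholders rather than tuned thresholds, and a real implementation would operate on camera frames with an optimized image library.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each channel 0-255
Range = Tuple[Pixel, Pixel]    # (lower bound, upper bound), both inclusive

# Hypothetical ranges for illustration only; these are not tuned values.
COLOR_RANGES: Dict[str, Range] = {
    "red": ((150, 0, 0), (255, 90, 90)),
    "blue": ((0, 0, 150), (90, 90, 255)),
    "yellow": ((170, 170, 0), (255, 255, 110)),
}


def in_range(pixel: Pixel, bounds: Range) -> bool:
    """Return True when every channel lies inside its own [low, high] range."""
    lower, upper = bounds
    return all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper))


def threshold_mask(image: List[List[Pixel]], bounds: Range) -> List[List[int]]:
    """Build a binary mask: 1 where the pixel passes the threshold, else 0."""
    return [[1 if in_range(p, bounds) else 0 for p in row] for row in image]


# A tiny 2x2 "frame": a red, a blue, a yellow, and a gray pixel.
image = [
    [(200, 30, 40), (20, 30, 220)],
    [(240, 230, 50), (90, 90, 90)],
]
masks = {name: threshold_mask(image, b) for name, b in COLOR_RANGES.items()}
```

Because each channel is tested independently, a change in lighting that shifts all three channels can push a pixel out of its box, which is consistent with the inconsistency described above.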

I took a step back and thought about how others may have solved this problem. I began thinking about photo editors. Many editors have a "smart select" mode that uses some sort of color similarity function to determine which pixels are likely to be part of the same object. I researched how this function works. The color similarity problem has many solutions that work well in different contexts. The main issue was that my algorithm was sensitive to changes in lighting conditions. In my research, I discovered that many color similarity formulas perform this calculation in the L*a*b* color space. This color space is designed to be approximately perceptually uniform, meaning that the Euclidean distance between two colors roughly corresponds to the difference a human would perceive between them. It also separates lightness (L*) from the two color components (a* and b*). These properties make thresholding in this space far more effective!
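As a rough sketch of the idea, the snippet below converts sRGB pixels to L*a*b* using the standard sRGB and CIE formulas (D65 white point) and compares colors by Euclidean distance, which is the CIE76 color difference. The function names, the sample colors, and the `max_distance` cutoff are assumptions made for this example; the actual targeting code may use a different similarity formula.

```python
import math
from typing import Tuple

Color = Tuple[float, float, float]

# D65 reference white, matching the sRGB -> XYZ matrix used below.
WHITE = (0.95047, 1.0, 1.08883)


def srgb_to_lab(rgb: Tuple[int, int, int]) -> Color:
    """Convert an 8-bit sRGB color to CIE L*a*b*."""

    def linear(c: int) -> float:
        # Undo the sRGB gamma curve.
        v = c / 255.0
        return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4

    r, g, b = (linear(c) for c in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    def f(t: float) -> float:
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / WHITE[0]), f(y / WHITE[1]), f(z / WHITE[2])
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e(lab1: Color, lab2: Color) -> float:
    """CIE76 color difference: Euclidean distance in L*a*b* space."""
    return math.dist(lab1, lab2)


def is_similar(rgb, target_rgb, max_distance: float = 40.0) -> bool:
    """Hypothetical threshold test: accept pixels close to a reference color."""
    return delta_e(srgb_to_lab(rgb), srgb_to_lab(target_rgb)) <= max_distance


bright_red, shadowed_red, blue = (220, 40, 40), (150, 30, 30), (40, 40, 220)
near = delta_e(srgb_to_lab(bright_red), srgb_to_lab(shadowed_red))
far = delta_e(srgb_to_lab(bright_red), srgb_to_lab(blue))
```

In this example the shadowed red stays much closer to the bright red than the blue does, so a single distance cutoff around a reference color can stand in for three separate per-channel ranges.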

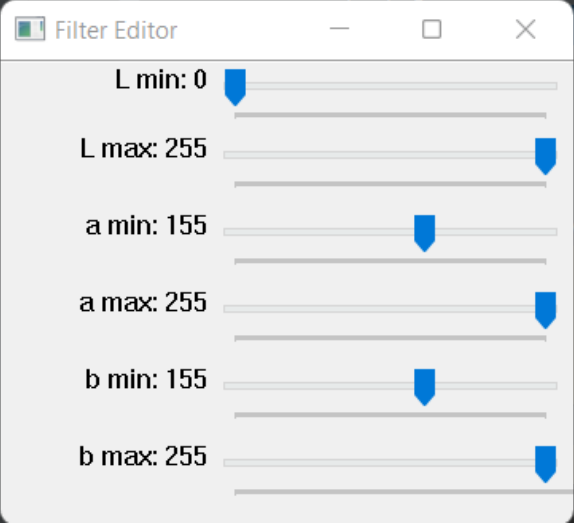

I created a program to test my vision code on my computer using my screen as input. This allowed me to easily test different algorithms on various video sources. I also added a window where I could adjust my thresholds on the fly to fine-tune them.

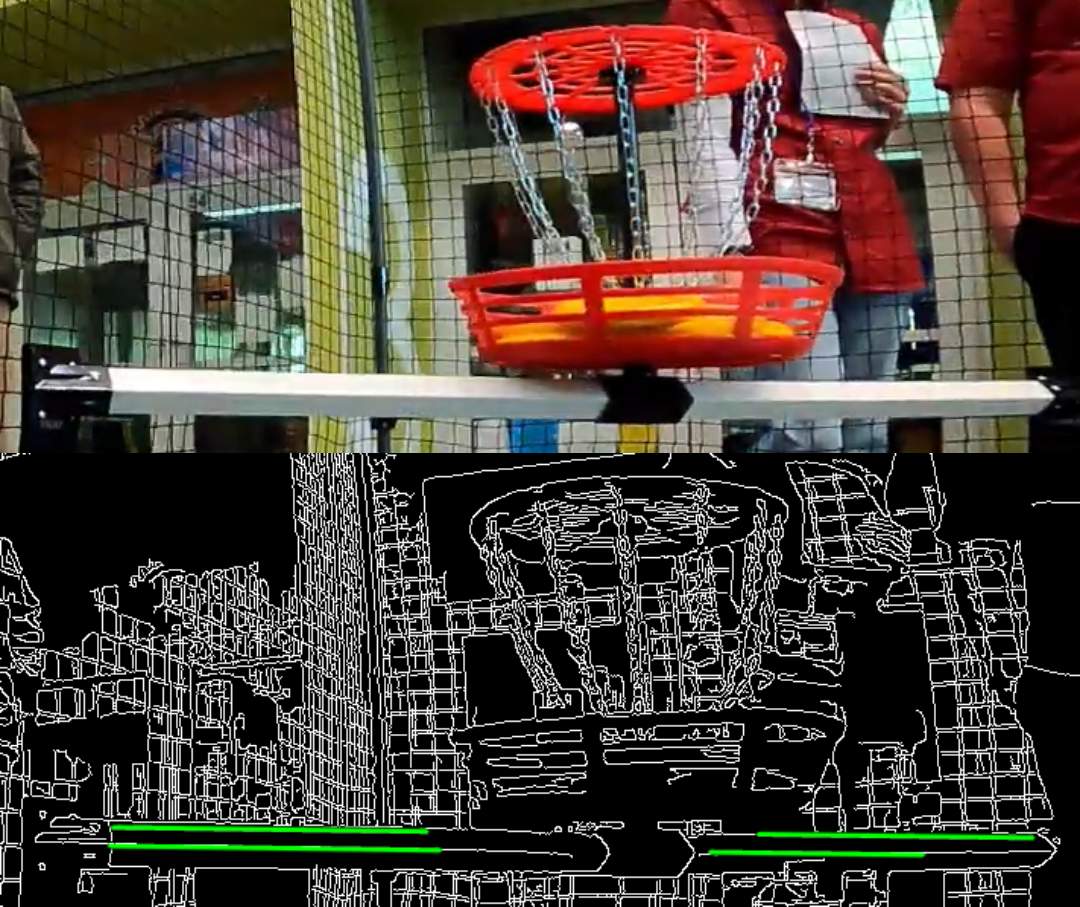

The next step was to create a goal detection algorithm. I started out by simply detecting red or blue blobs, but I was getting false positives from people wearing red or blue shirts behind the arena. I looked for other defining features near the goal that I could use to identify it. There was the net, but it is difficult to identify at low resolutions, at oblique angles, or when there is a dark background. There are large bars on the ground, but these can be obscured by robots and cannot be seen when our robot is close to the goal. However, there are two large silver bars that attach the goal to the arena. If I could identify those bars, I could use that information to verify which blob is the goal in the threshold mask.
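One way to picture this verification step is shown below. It is a hypothetical sketch, not the team's code: the `Blob` and `Bar` types, the `max_gap` and `min_bars` parameters, and the assumption that the bars appear horizontally overlapping and vertically close to the goal blob in the image are all invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Blob:
    """Bounding box of a colored region in the threshold mask (pixels)."""
    x: int
    y: int
    w: int
    h: int


@dataclass
class Bar:
    """A detected horizontal edge spanning x1..x2 at image row y (pixels)."""
    x1: int
    x2: int
    y: int


def bar_supports_blob(blob: Blob, bar: Bar, max_gap: int = 20) -> bool:
    """True when the bar overlaps the blob horizontally and lies within
    max_gap pixels of the blob's vertical extent (above, below, or inside)."""
    overlaps = bar.x1 < blob.x + blob.w and bar.x2 > blob.x
    gap = max(blob.y - bar.y, bar.y - (blob.y + blob.h), 0)
    return overlaps and gap <= max_gap


def pick_goal(blobs: List[Blob], bars: List[Bar],
              max_gap: int = 20, min_bars: int = 2) -> Optional[Blob]:
    """Return the largest blob backed by at least min_bars bars, or None."""
    best = None
    for blob in blobs:
        count = sum(bar_supports_blob(blob, bar, max_gap) for bar in bars)
        if count >= min_bars and (best is None or blob.w * blob.h > best.w * best.h):
            best = blob
    return best


shirt = Blob(x=10, y=50, w=40, h=40)   # false positive in the crowd
goal = Blob(x=200, y=40, w=60, h=30)   # the real goal
bars = [Bar(x1=190, x2=270, y=75), Bar(x1=195, x2=265, y=85)]
chosen = pick_goal([shirt, goal], bars)
```

Requiring two supporting bars means a red or blue shirt with no silver bars nearby is rejected even when it is larger than the goal in the frame.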

I had trouble identifying these bars with additional thresholds. Since they are silver, color information is not very helpful. I tried thresholding based on brightness or lightness, but any other bright color would be included in the mask. I decided to use the more sophisticated method of edge detection. I used the Canny algorithm, which smooths the image with a Gaussian kernel, computes intensity gradients, and then keeps only the thin, strong edges using non-maximum suppression and hysteresis thresholding. This creates an edge mask that I can search for long horizontal edges with a Hough Transform.
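To make the Hough step concrete, here is a minimal pure-Python sketch that assumes a binary edge mask has already been produced (for example by Canny). Every edge pixel votes for each line `rho = x*cos(theta) + y*sin(theta)` that could pass through it, and horizontal lines show up as peaks near `theta = 90` degrees. The function names and parameters are illustrative assumptions; an optimized library routine, such as the Hough functions in OpenCV, would be the practical choice on the Pi.

```python
import math
from collections import Counter
from typing import List, Tuple


def hough_votes(edges: List[List[int]], angle_step_deg: int = 1) -> Counter:
    """Accumulate votes for (rho, theta_deg) line parameters.

    theta is the angle of the line's normal, so theta = 90 degrees
    describes a horizontal line at image row y = rho.
    """
    votes: Counter = Counter()
    for y, row in enumerate(edges):
        for x, on in enumerate(row):
            if not on:
                continue
            for theta_deg in range(0, 180, angle_step_deg):
                theta = math.radians(theta_deg)
                rho = round(x * math.cos(theta) + y * math.sin(theta))
                votes[(rho, theta_deg)] += 1
    return votes


def horizontal_lines(votes: Counter, min_votes: int,
                     tolerance_deg: int = 2) -> List[Tuple[int, int, int]]:
    """Return (rho, theta_deg, votes) for near-horizontal lines with enough votes."""
    return sorted(
        (rho, theta, count)
        for (rho, theta), count in votes.items()
        if count >= min_votes and abs(theta - 90) <= tolerance_deg
    )


# An 8x6 edge mask with one long horizontal edge on row 3.
row_mask = [[1 if y == 3 else 0 for _ in range(8)] for y in range(6)]
row_votes = hough_votes(row_mask)
row_lines = horizontal_lines(row_votes, min_votes=8)

# The same size mask with a vertical edge at column 2 instead.
col_mask = [[1 if x == 2 else 0 for x in range(8)] for _ in range(6)]
col_lines = horizontal_lines(hough_votes(col_mask), min_votes=6)
```

The `min_votes` threshold acts as a minimum edge length, which is what lets long bars stand out from short, incidental edges.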

With this algorithm in place, the Raspberry Pi finally has enough information to locate the goal! But how will that data be communicated to the Brain?

Putting It All Together

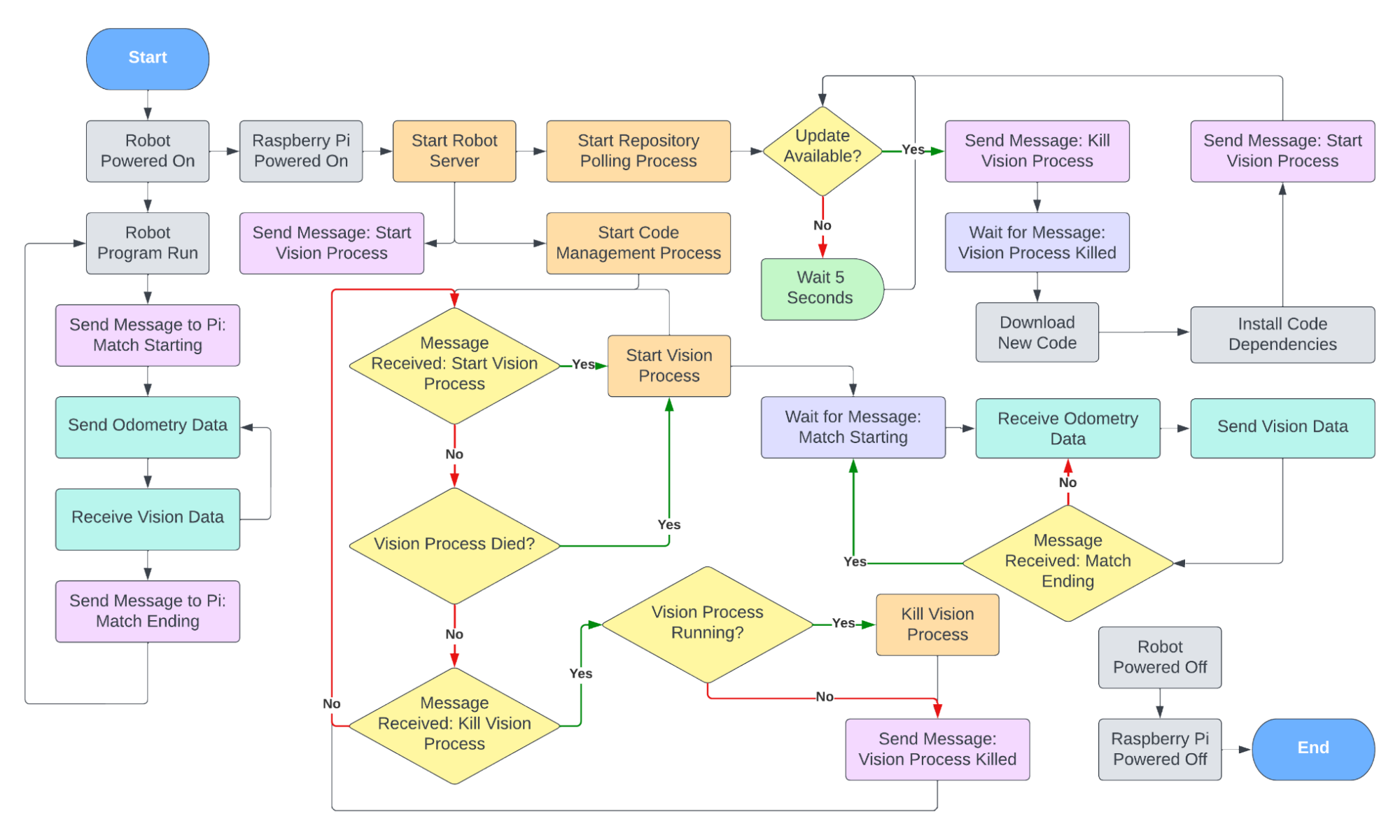

The communication protocol between the Brain and the Pi is nontrivial. In the middle of a match, the messages are relatively simple, but a certain amount of coordination is required to ensure that the vision program starts and remains running during the match. There is also the issue of code updates: new code needs to be downloaded to the Pi when the repository is updated. I designed a separate server program that runs as a daemon on the Raspberry Pi to manage the application lifecycle. It functions as a state machine. Messages serialized with Google's Protocol Buffers (Protobuf) library are exchanged to coordinate between the robot and the vision process. Their interaction is outlined below.
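A lifecycle daemon of this kind can be modeled as a small transition table. The sketch below is only an illustration of the state-machine idea: the state names, event names, and transitions are hypothetical, and plain Python enums stand in for the Protobuf messages used by the real system.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()       # vision process not running
    UPDATING = auto()   # pulling new code from the repository
    RUNNING = auto()    # vision process running
    FAILED = auto()     # vision process exited unexpectedly


class Event(Enum):
    UPDATE_REQUESTED = auto()
    UPDATE_DONE = auto()
    START_REQUESTED = auto()
    STOP_REQUESTED = auto()
    PROCESS_EXITED = auto()


# Hypothetical transition table: (current state, event) -> next state.
TRANSITIONS = {
    (State.IDLE, Event.UPDATE_REQUESTED): State.UPDATING,
    (State.UPDATING, Event.UPDATE_DONE): State.IDLE,
    (State.IDLE, Event.START_REQUESTED): State.RUNNING,
    (State.RUNNING, Event.STOP_REQUESTED): State.IDLE,
    (State.RUNNING, Event.PROCESS_EXITED): State.FAILED,
    (State.FAILED, Event.START_REQUESTED): State.RUNNING,
}


class Lifecycle:
    """Tracks the vision process state and rejects invalid requests."""

    def __init__(self) -> None:
        self.state = State.IDLE

    def handle(self, event: Event) -> bool:
        """Apply an event; return False (state unchanged) if it is not allowed."""
        next_state = TRANSITIONS.get((self.state, event))
        if next_state is None:
            return False
        self.state = next_state
        return True


daemon = Lifecycle()
daemon.handle(Event.UPDATE_REQUESTED)                   # IDLE -> UPDATING
rejected = not daemon.handle(Event.START_REQUESTED)     # cannot start mid-update
daemon.handle(Event.UPDATE_DONE)                        # UPDATING -> IDLE
daemon.handle(Event.START_REQUESTED)                    # IDLE -> RUNNING
```

Keeping the allowed transitions in one table makes it easy to see, for instance, that a start request during an update is ignored rather than launching half-downloaded code.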

Reflection

Although this competition is a team sport, I mostly worked alone. I was given a task, but I had complete control over how every aspect of it was completed. As with AgTern, I enjoyed solving unfamiliar challenges and managing a large code base. I learned a lot about communication protocols and computer vision algorithms!

Match Videos

Texas State Championship

Match F1

World Championship

Research Division

Match SF2

Documentation

I wrote the following technical reports to document my work for Aggie Robotics. They contain additional information about the implementation of the projects that I worked on.

- Embedded Systems Integration (PDF)

- Embedded Systems Software Integration (PDF)

- Computer Vision Targeting System Development (PDF)

Awards

- VEX U Robotics - Spin Up (Fall 2022 to Spring 2023)

  - Texas Tournament

    - Overall - 3rd Place Team

    - Skills Competition - 1st Place Team

    - Tournament Champion Award

    - Robot Skills Champion Award

    - Excellence Award

  - World Championship

    - Overall - 10th Place Team

    - Skills Competition - 12th Place Team

    - Energy Award

- Texas Tournament